Shoving Your Application Code Into the Web Server: my talk at WebCamp Zagreb 2018

2018-10-11 in webcamp, talks

In 2018, I gave a talk on how to use Lua, nginx, and OpenResty as a full-blown asynchronous programming environment.

It's been a while since I've given a talk: my website says it's been more than 3 years. This year I decided to break that streak and submitted a talk called "Shoving Your Application Code Into the Web Server" to WebCamp Zagreb, and it got picked! The talk served as a short introduction to the nginx module system with a focus on OpenResty and its ecosystem.

The conference was a blast, as always. I wasn't on the organizing team this year, but the crew did an amazing job (again, as always). Zagreb is lucky to have an event with such a lengthy and strong tradition.

Below is a very approximate transcript of the talk along with the slides, skipping the boring introductory part where I talk about myself. It might contain some stuff I forgot to say during the talk, and it might be missing some stuff that I did say in the talk, but such is life. The structure was shamelessly borrowed from Maciej Ceglowski. The page will be updated with a recording once it's available.

The Web Server

My day job mostly revolves around dealing with components that end up speaking HTTP, either between each other or with the outside world. And where there's HTTP, there's bound to be a web server.

If you're like I was until a few years ago, you probably don't think about the web server too much. Sure, you may have tacked on a few modules, and you may have written a few neat configuration files... but once it's up and running, you probably think of it as this fairly stable black box that somehow turns incoming HTTP requests into something your webapp can understand. I hope one of the key takeaways of this talk will be that your web server can do much more than that.

nginx

A prime example of a web server is nginx. It was built in the early 2000s in order to tackle the so-called c10k problem (a term coined back in 1999), which boils down to being able to handle 10 000 concurrent TCP/IP connections on a single machine. While this may sound trivial to you today with all of the hardware resources we have at our disposal, it was far less of a stroll back then.

Today, nginx powers between 30% and 40% of the public internet. If your little corner of the public internet isn't within this bracket, bummer — we'll mostly be dealing with nginx and its ecosystem throughout this talk. However, I hope this will at least make you consider using nginx for your next project.

There are two properties of nginx that will be very important for this talk. The first is that nginx is modular; i.e. it has an API and allows us to build extensions that augment its functionality.

The second important property is that it's built on an asynchronous, event-driven architecture, which is the key differentiating factor that allowed it to tackle the c10k problem at a time when most web servers used a thread- or process-based approach to handling multiple concurrent connections.

nginx has a very rich module system, encompassing modules for most of the web-development-related tasks you might imagine. Parsing JSON, querying PostgreSQL databases and various other data stores, authenticating against LDAP servers, you name it.

The limiting factor is that the functionality of these modules is exposed through the nginx configuration language. While this is neat for those times when you simply want to map an HTTP location to a simple SQL query and return its result as a JSON object, doing any sort of serious business logic simply isn't viable with the configuration language nginx provides.

Occasionally, you just might want that tiny bit of non-trivial business logic in there. For example, you might want to authenticate against an LDAP instance and ask a Postgres DB about the upstream server to which you need to forward your HTTP request. Or, you might want to add some additional HTTP headers before forwarding the request further, with the headers depending on some heavy business logic. Or you might simply want to change the wire transfer format because something behind your web server doesn't speak plain old HTTP/1.1.

These are all legitimate requirements that might not warrant a whole webapp on their own, but might not fit into the architecture of your existing webapp (or webapps). Since you have all of the modules needed within nginx already, it would be really nice if you could somehow use them to do at least some of these tasks.

So, we come to the central question of this talk: what if we could script these nginx modules with something other than the nginx configuration language?

Let's put that aside for a moment and talk about Lua for a little bit...

Lua

Lua is a nice, small dynamic programming language with very clear semantics and at least two amazing implementations. It's not entirely unlike JavaScript, but back when it was originally conceived, it was meant to compete with Scheme and a neat little language called Tcl.

The reference implementation is written in ANSI C, which means it's highly portable: you can run Lua on any system that has a compliant compiler, which is basically any system in existence. Lua is also trivially embeddable, meaning you can simply include it in your existing C/C++ app and use its beautiful API to allow your users to script your software.

Another thing Lua has going for it is LuaJIT, which is arguably the best dynamic language just-in-time compiler in the world. This, of course, comes with a 400-page disclaimer, but whether you're convinced or not, LuaJIT is an engineering marvel: it's an insanely well-optimized and performant VM worth looking into.

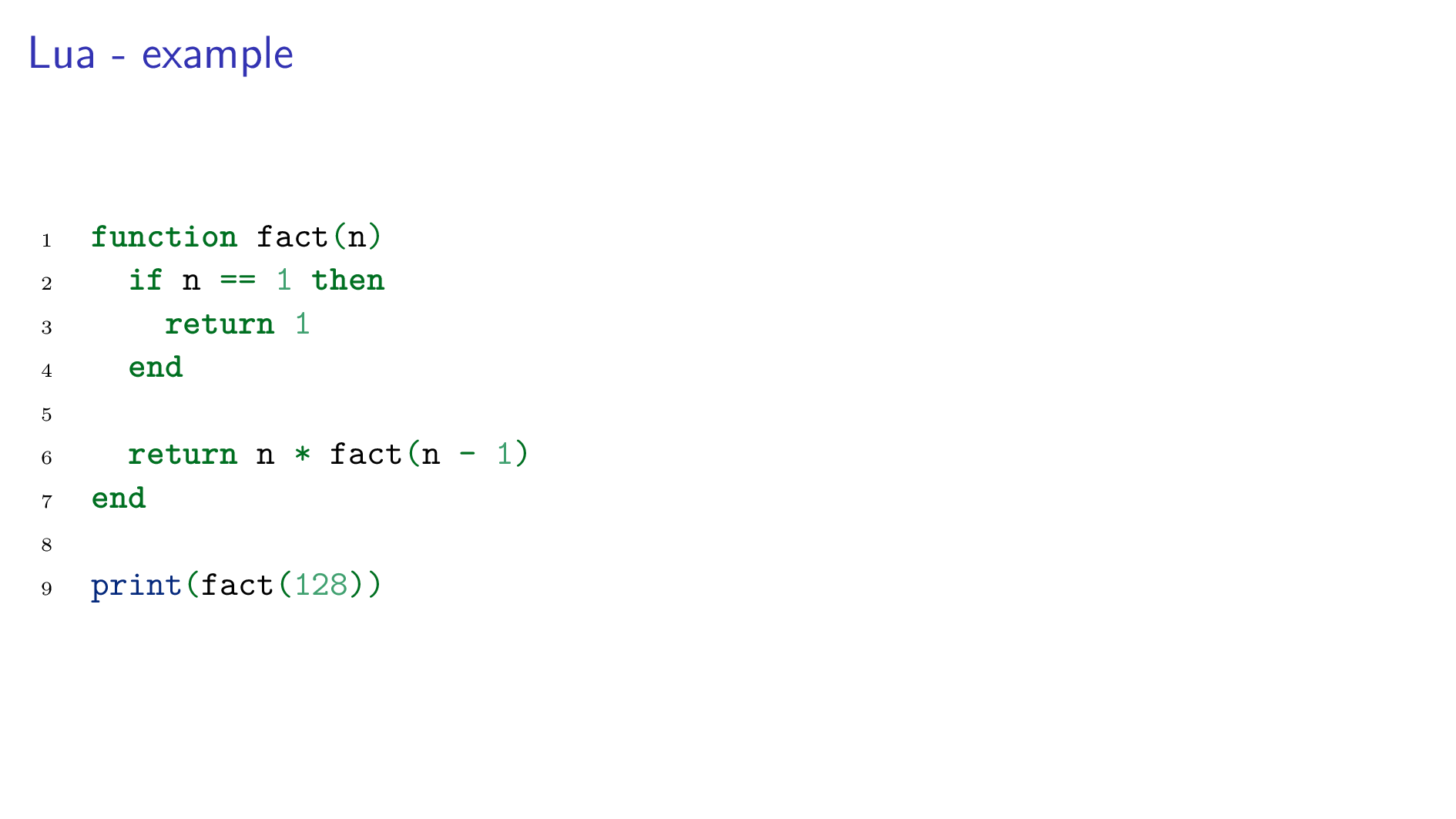

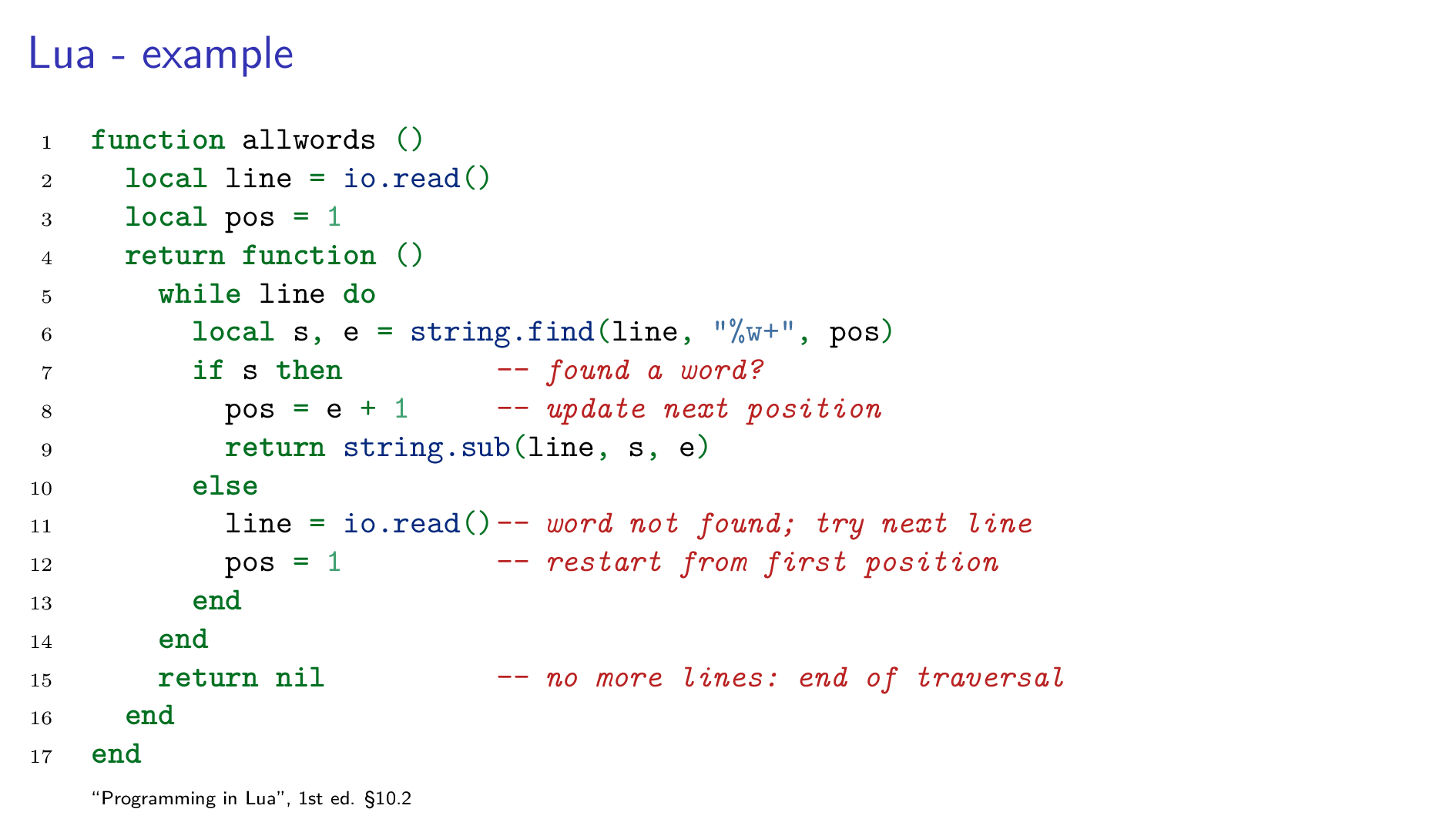

Here are a few examples of Lua which should allow you to get a feel for the language if you've never seen it before.

This first example is a very obvious factorial function, nothing too exciting...
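In case the slide isn't legible, a factorial function in Lua might look roughly like the sketch below. It isn't necessarily the exact code from the slide, and it assumes `n` is a non-negative integer.

```lua
-- Recursive factorial; assumes n is a non-negative integer.
-- `local function` makes the name visible inside its own body,
-- which is what allows the recursive call.
local function factorial(n)
  if n == 0 then
    return 1
  end
  return n * factorial(n - 1)
end

print(factorial(5)) -- 120
```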

This other example, shamelessly borrowed from Programming In Lua, 1st ed., outlines some less obvious features of the language like the module system and first-class functions.
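To give a flavor of those two features without reproducing the book's example, here is a minimal sketch. The `shapes` module and the `map` helper are made up for illustration; the point is only that a module is a plain table of functions, and that functions can be passed around like any other value.

```lua
-- A Lua module is just a table of functions (hypothetical "shapes" module).
local shapes = {}

function shapes.circle(r)
  return { kind = "circle", area = math.pi * r * r }
end

function shapes.square(side)
  return { kind = "square", area = side * side }
end

-- Functions are first-class values: map receives one as an argument.
local function map(list, fn)
  local out = {}
  for i, v in ipairs(list) do
    out[i] = fn(v)
  end
  return out
end

-- Pass a module function, then an anonymous function.
local squares = map({ 1, 2, 3 }, shapes.square)
local areas = map(squares, function(s) return s.area end)

print(table.concat(areas, ", ")) -- 1, 4, 9
```

In a real project the table would live in its own file (say, `shapes.lua`) that ends with `return shapes`, and other files would load it with `require("shapes")`.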

So, we know that Lua is easily embeddable in C, and we also know that nginx is written in C... The question kinda asks itself: what if we could use Lua to script some of those nginx modules (and more)?

ngx_http_lua_module

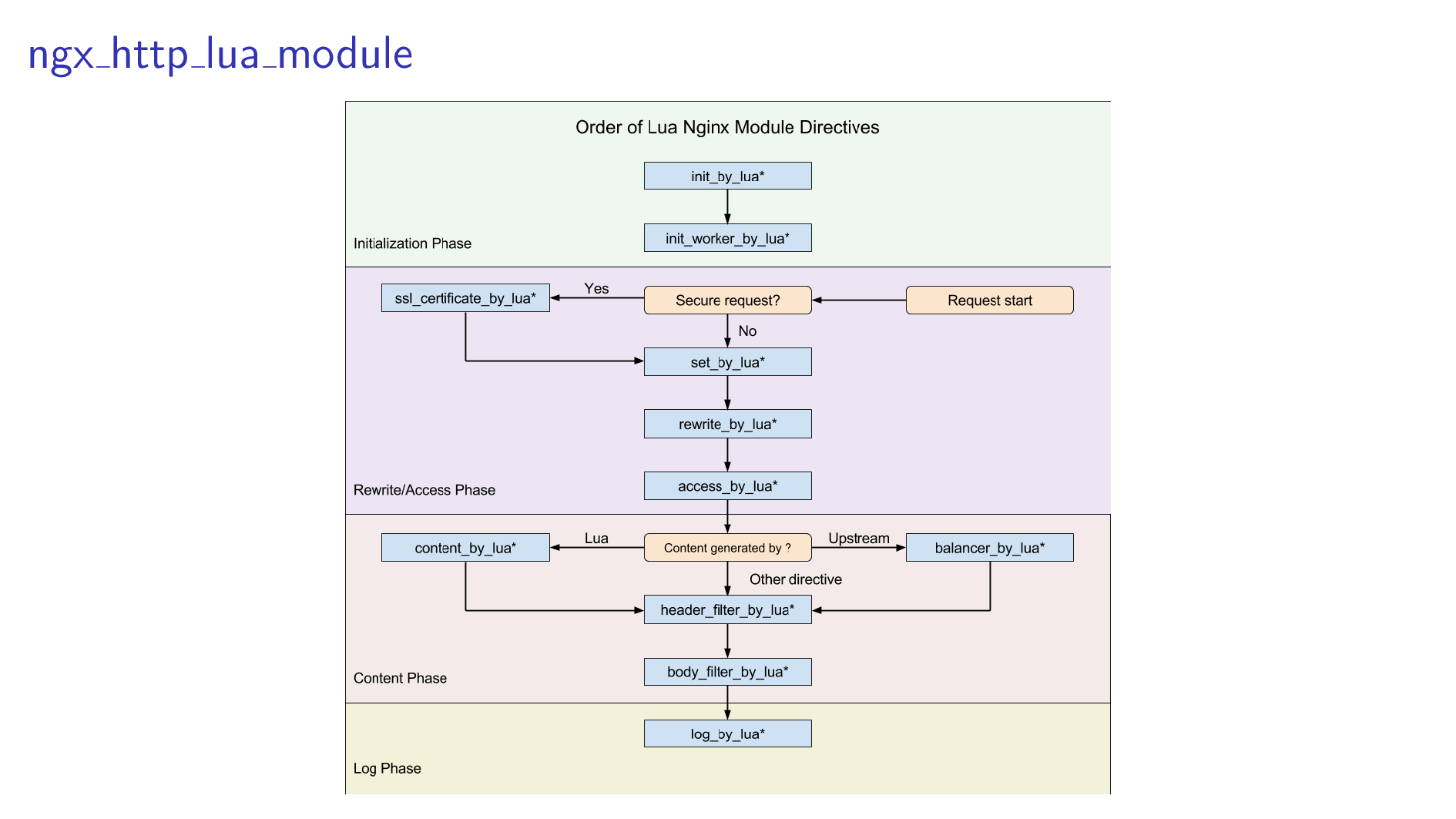

Luckily, far more capable people than me already came to this brilliant and completely non-obvious conclusion, and built ngx_http_lua_module. This module allows us to embed Lua code in various phases of nginx's worker process, displayed in the handy chart on the slide.

The two big ones we'll be dealing with today are content_by_lua and balancer_by_lua just because they're the most obvious, but all of them are fairly useful.

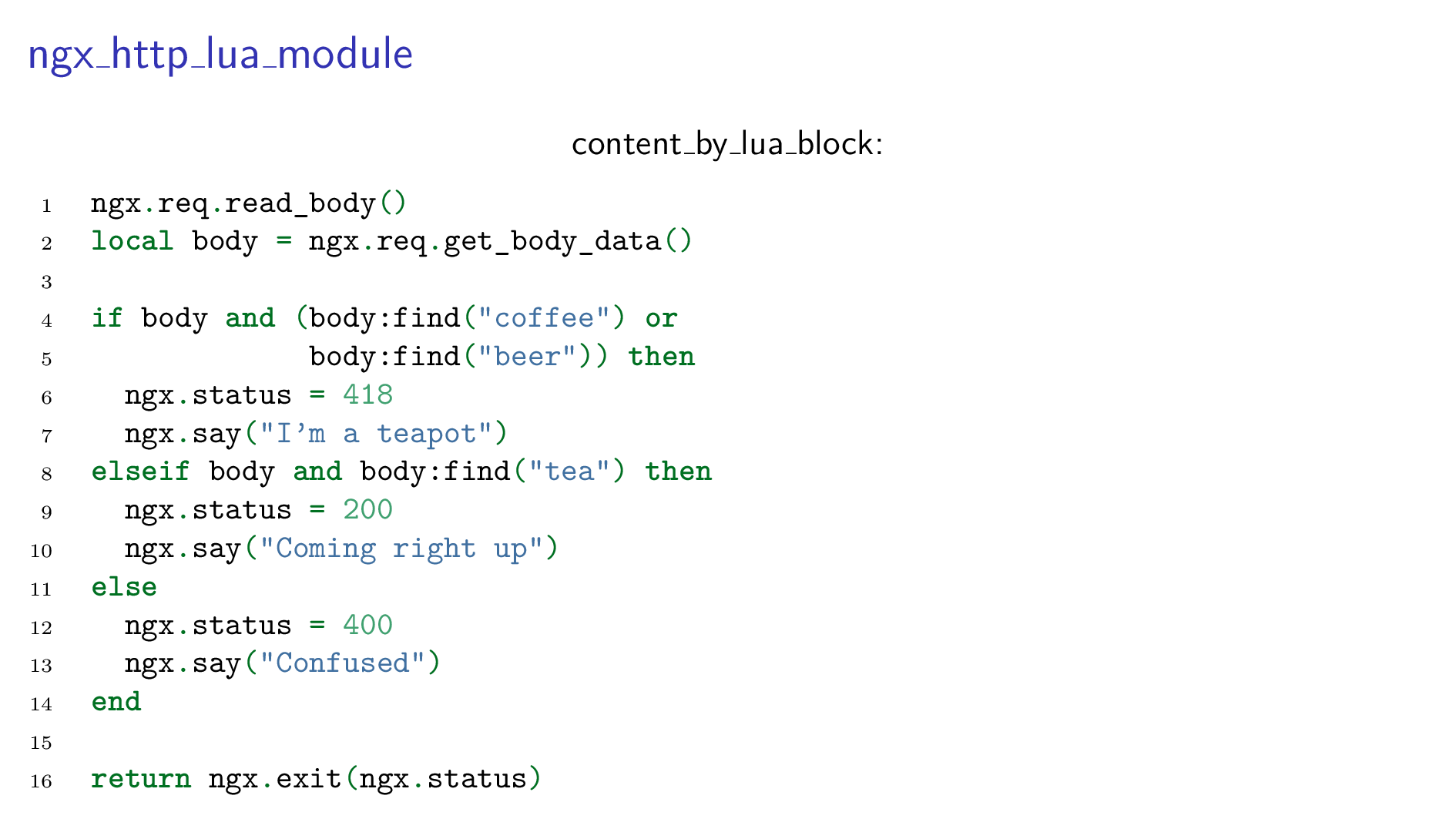

Let's say you've been tasked by the business with the very important task of coming up with a /brew endpoint that:

- responds with HTTP 418 "I'm a teapot" if it finds the words "coffee" or "beer" within the request;

- responds with HTTP 200 "OK" if it finds the word "tea" in the request;

- responds with HTTP 400 "Bad Request" in any other case.

We’ve all been there.

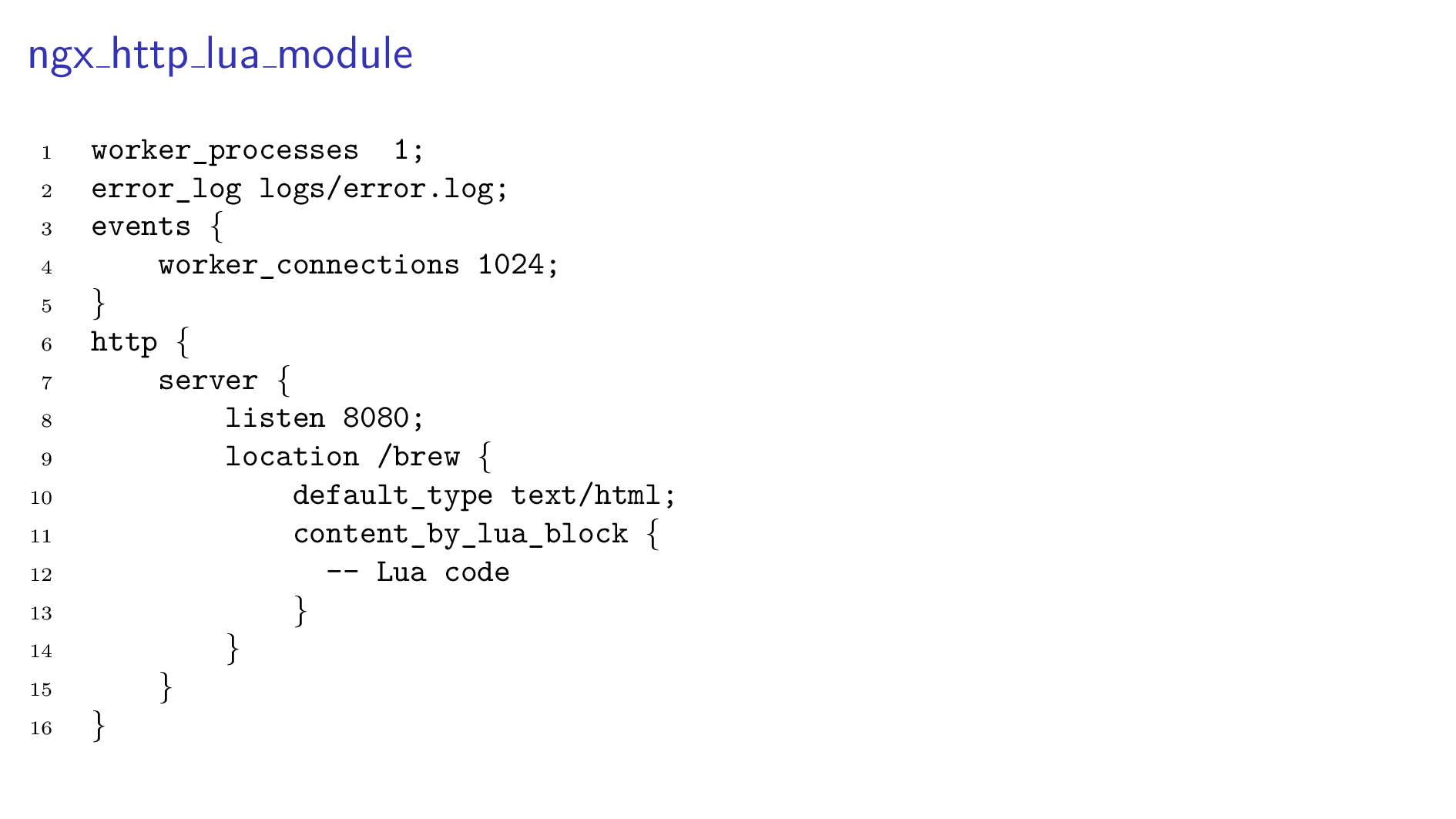

You could start with a fairly simple nginx configuration file like the one on the slide, the only real difference being the content_by_lua_block that allows us to execute arbitrary Lua code in order to respond to the HTTP request on this location.
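For readers without the slide, the Lua that goes inside such a `content_by_lua_block` could be sketched as follows. This is a guess at the shape of the code rather than the slide's exact contents: it assumes the words are looked for in the request body, and `brew_status` is a hypothetical helper name. The `ngx.*` calls are from ngx_http_lua_module; the `if ngx` guard is only there so the same file also loads in a plain `lua` interpreter.

```lua
-- Map a request body to the status code the business asked for.
-- Plain-text search (4th argument `true`), case-insensitive.
local function brew_status(body)
  local text = (body or ""):lower()
  if text:find("coffee", 1, true) or text:find("beer", 1, true) then
    return 418 -- I'm a teapot
  elseif text:find("tea", 1, true) then
    return 200 -- OK
  end
  return 400 -- Bad Request
end

-- Inside nginx the global `ngx` table exists; outside it is nil.
if ngx then
  ngx.req.read_body()
  -- get_body_data() returns nil for an empty body, or for a body that
  -- nginx buffered to a temporary file instead of memory.
  local status = brew_status(ngx.req.get_body_data())
  ngx.status = status
  ngx.say(status)
end
```

Keeping the decision in a pure function means it can be unit tested without starting nginx at all.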

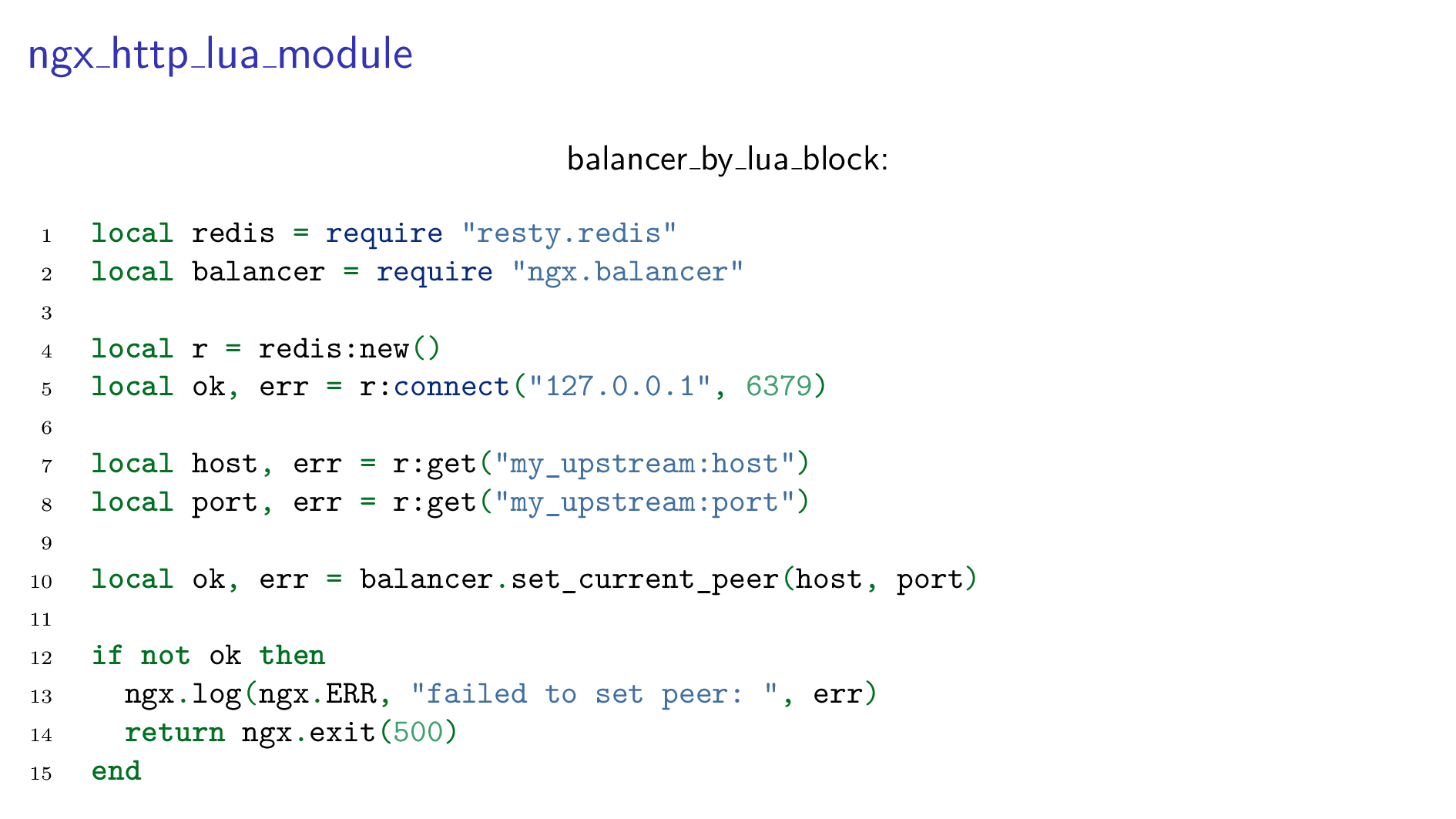

Now let's say you've been tasked with the far less common and far less important task of forwarding your incoming HTTP requests to an upstream that's dynamically defined in a Redis datastore.

You might start again with a fairly simple nginx configuration file, defining that all of your incoming requests should be forwarded to an upstream called backend. This upstream would be defined with a fake IP address, and a balancer_by_lua_block that would allow us to execute arbitrary Lua code in order to set the real upstream location.

...and again, the arbitrary Lua code might look something like this. This isn't necessarily executable and lacks a lot of error handling logic, but the gist of it is there: you query a Redis instance in order to get the upstream host and the port, and then use the exposed APIs in order to set the upstream peer to the parameters you just read.
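A slightly more defensive sketch of the same idea follows. One deliberate deviation from the description above: according to the ngx_http_lua_module documentation, APIs that may yield (cosockets included, and therefore resty.redis) are disabled inside `balancer_by_lua*`, so this version does the Redis lookup in an earlier phase (`access_by_lua*`) and passes the result along via `ngx.ctx`. The Redis address, the key names `upstream:host` and `upstream:port`, and the `dynamic_upstream` and `parse_upstream` names are all assumptions made for illustration.

```lua
-- Validate two raw Redis replies and turn them into a host/port pair.
-- `null` is the client's sentinel for a missing key (ngx.null in OpenResty).
local function parse_upstream(host, port, null)
  if host == nil or host == null or port == nil or port == null then
    return nil, "upstream not configured"
  end
  local n = tonumber(port)
  if not n or n < 1 or n > 65535 then
    return nil, "invalid port: " .. tostring(port)
  end
  return { host = host, port = n }
end

local dynamic_upstream = {}

-- Meant to be called from access_by_lua_block, where cosockets work.
function dynamic_upstream.lookup()
  local redis = require "resty.redis"
  local red = redis:new()
  red:set_timeout(100) -- milliseconds
  local ok, err = red:connect("127.0.0.1", 6379) -- hypothetical address
  if not ok then
    ngx.log(ngx.ERR, "redis connect failed: ", err)
    return ngx.exit(ngx.HTTP_BAD_GATEWAY)
  end
  local host = red:get("upstream:host") -- made-up key names
  local port = red:get("upstream:port")
  red:set_keepalive(10000, 10) -- return the connection to the pool
  local upstream, perr = parse_upstream(host, port, ngx.null)
  if not upstream then
    ngx.log(ngx.ERR, perr)
    return ngx.exit(ngx.HTTP_BAD_GATEWAY)
  end
  ngx.ctx.upstream = upstream
end

-- Meant to be called from balancer_by_lua_block: no I/O, just set the peer.
-- set_current_peer expects an IP address, not a hostname.
function dynamic_upstream.set_peer()
  local balancer = require "ngx.balancer"
  local upstream = ngx.ctx.upstream
  local ok, err = balancer.set_current_peer(upstream.host, upstream.port)
  if not ok then
    ngx.log(ngx.ERR, "failed to set peer: ", err)
    return ngx.exit(ngx.ERROR)
  end
end
```

In a module file, the table would be returned at the end (`return dynamic_upstream`) and each function would be called from its respective `*_by_lua_block`.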

This looks deceptively simple, and by simply looking at this you would probably say that this code is 100% synchronous. But your Redis instance might be in China... or down. You wouldn't want your nginx worker process waiting for the Redis response! While it's waiting, it's unable to process any more incoming HTTP requests, basically murdering your performance (and, almost certainly, access to your webapp in general).

However, thanks to the magic of nginx's APIs and ngx_http_lua_module, all of the resty.* and ngx.* APIs you see are completely non-blocking: while one request is waiting on Redis, your worker process keeps on processing other HTTP requests.
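How can code that reads as synchronous not block? ngx_http_lua_module runs each request handler inside a Lua coroutine and suspends it whenever it would have to wait on I/O. The toy scheduler below sketches that idea in plain Lua. Nothing in it is a real nginx or OpenResty API: `fake_redis_get` and the loop at the bottom are stand-ins for the cosocket layer and the nginx event loop.

```lua
-- Toy event loop: each "request" is a coroutine that yields at the point
-- where real code would be waiting on the network.
local log = {}

local function fake_redis_get(key)
  coroutine.yield() -- "waiting for Redis": hand control back to the loop
  return "value-of-" .. key
end

local function handler(id)
  return coroutine.create(function()
    log[#log + 1] = id .. ": start"
    local v = fake_redis_get("upstream") -- reads like a blocking call
    log[#log + 1] = id .. ": got " .. v
  end)
end

-- Resume every pending request in turn until all of them have finished.
local pending = { handler("req1"), handler("req2") }
while #pending > 0 do
  local still_running = {}
  for _, co in ipairs(pending) do
    assert(coroutine.resume(co))
    if coroutine.status(co) ~= "dead" then
      still_running[#still_running + 1] = co
    end
  end
  pending = still_running
end

print(table.concat(log, "\n"))
```

The log shows req2 starting before req1 has received its reply: the single "worker" never sat idle while the first request was waiting.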

OpenResty

I hope you're intrigued by now, but there's a catch: ngx_http_lua_module isn't included in nginx by default. This means you either have to depend on your operating system having a package (many do), or build it yourself.

Enter OpenResty!

OpenResty is a bundle that contains nginx, LuaJIT, and a bunch of non-blocking libraries that allow us to do many of the things we've talked about.

It has been used by the likes of Tumblr, Alibaba, CloudFlare, and many others. CloudFlare has since moved on from OpenResty, but it had used it for a long period of time for many of its load balancing needs; they have also employed the principal developer of OpenResty and financed a lot of the development behind the project.

Since ngx_http_lua_module is a fairly central part of this whole thing, you'll oftentimes hear "ngx_http_lua_module" and "OpenResty" used interchangeably. Don't let this confuse you: for all intents and purposes, they're the same thing.

While your ops practices may vary and you might want to build the entire thing yourself, OpenResty seems to be the preferred way of using nginx with the Lua module.

Non-trivial Usage

All of our examples so far have been fairly simple. I do hope no one believes that keeping your business logic in nginx configuration files is a good idea, though! In order to write actual working software with this, we do need a few more tools.

ngx_http_lua_module does allow us to load Lua code from files on the filesystem instead of hardcoded blocks in the config file: we can use *_by_lua_file instead of *_by_lua_block. This allows us to nicely structure the code using standard Lua mechanisms like modules.

Since we're using a standard Lua runtime within nginx, LuaRocks is fair game: we can use it to download & load 3rd party modules from the LuaRocks repos.

For testing, you can use standard Lua tools like busted to unit test your Lua modules. For end-to-end testing you can use Test::Nginx::Socket, a Perl module written by the author of OpenResty, which allows you to define your nginx configuration, HTTP request and expected HTTP response in a single unholy amalgamation of a file (gotta love Perl developers!). It will then start an nginx instance with that configuration, execute the HTTP request and assert that the actual response matches the expected one.

Since the focus of OpenResty is high-performance code, profiling is important. Again, the author of OpenResty has a nice set of SystemTap-based scripts which allow you to do all kinds of on-CPU and off-CPU profiling, and generate flame graphs in order to identify slow (or blocking!) parts of your code.

What's Not To Like?

One of the first things people notice when diving into any kind of Lua development is that the ecosystem is fragmented. LuaJIT is in maintenance mode, and is compatible with version 5.1 of the reference implementation, PUC-Lua, plus a handful of features from 5.2. PUC-Lua has since moved on, and is currently heading towards version 5.4.

Operationally, this isn't that huge of an issue: most of the community is focused on LuaJIT simply because of its insane performance. However, it's unclear whether this will be the case forever.

The second problem is: it's not easy being asynchronous. Even in runtimes that push asynchronicity by default, like Go and Node.js, it's not always easy to guarantee non-blocking behavior. This is even more prominent in Lua, where asynchronicity isn't the focus.

This can be remedied by only using the libraries from the nginx and OpenResty ecosystems that are built with this in mind. If this is not an option, an alternative is to dig into the nginx C API and write the library yourself in a non-blocking fashion, which may or may not be viable depending on your familiarity with C.

Final Words

With all of this said and done, we come to what could comfortably have been an alternate title of this talk: OpenResty gives you the ability to configure your web server in a Turing-complete programming language. But even more than that, OpenResty allows you to do it using a very comfortable, highly performant programming language.

In theory, you could write your entire webapp using OpenResty. In practice, this will heavily depend on the availability of async libraries and the overall fitness of your project to nginx's architecture. However, if you do have a project that fits into the asynchronous, event-driven nature offered by this ecosystem, I do hope this talk managed to convince you to give OpenResty a go.

Thank you!